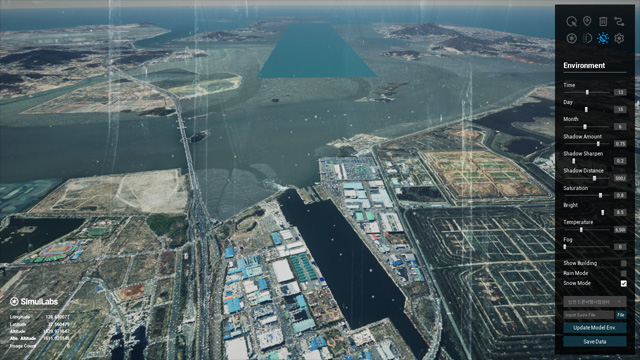

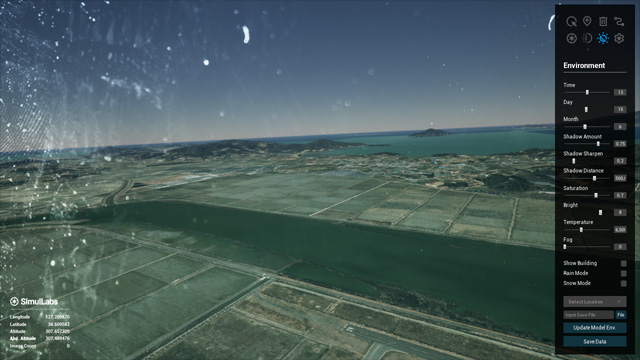

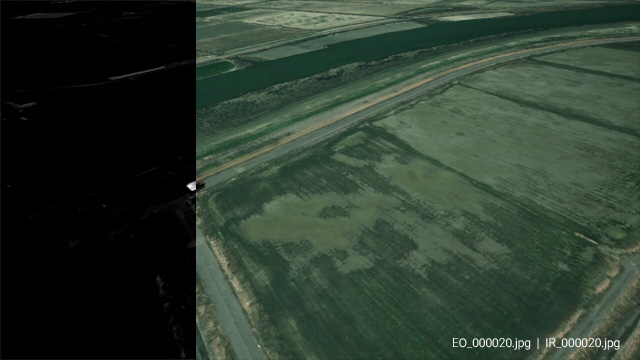

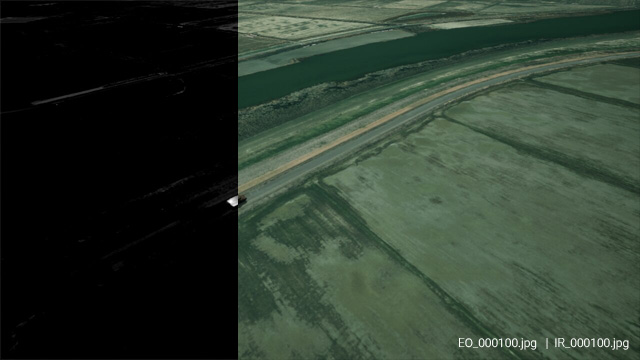

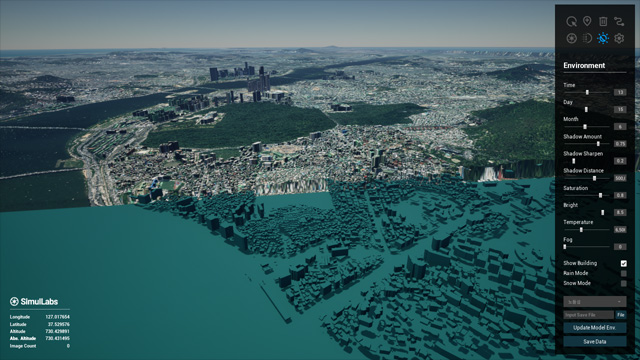

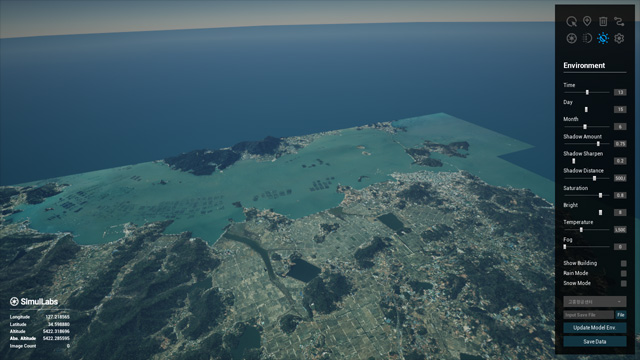

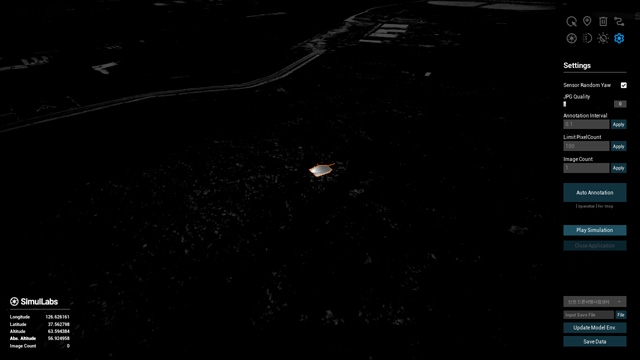

- Combines Unreal Engine's ultra-realistic rendering with a fully custom Cesium 3D Tiles environment, including bespoke terrain and building models crafted in-house.

- Operates entirely offline or in closed networks, enabled by self-produced 3D Tiles data that remove all dependency on external Cesium servers.
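Running without external servers is possible because a 3D Tiles tileset is a `tileset.json` file plus tile content files, all of which can be loaded from local disk. Below is a minimal sketch of a single-tile tileset that follows the 3D Tiles 1.0 layout. The helper `make_local_tileset`, the content file name `buildings.b3dm`, and the coordinates are hypothetical. The in-house tiling pipeline itself is not described here.

```python
import json
import math

def make_local_tileset(content_uri, west_deg, south_deg, east_deg, north_deg,
                       min_height_m, max_height_m, geometric_error=100.0):
    """Build a minimal single-tile 3D Tiles 1.0 tileset (hypothetical helper).

    The 'region' bounding volume is [west, south, east, north,
    minimumHeight, maximumHeight], with angles in radians and heights in
    meters, so the degree inputs are converted here.
    """
    region = [math.radians(west_deg), math.radians(south_deg),
              math.radians(east_deg), math.radians(north_deg),
              min_height_m, max_height_m]
    return {
        "asset": {"version": "1.0"},
        "geometricError": geometric_error,
        "root": {
            "boundingVolume": {"region": region},
            "geometricError": 0.0,  # leaf tile: no further refinement
            "refine": "ADD",
            # Relative URI, resolved against tileset.json's own location,
            # so the tile is read from local storage, not a remote server.
            "content": {"uri": content_uri},
        },
    }

if __name__ == "__main__":
    tileset = make_local_tileset("buildings.b3dm", 126.97, 37.55, 126.99, 37.57, 0.0, 300.0)
    print(json.dumps(tileset, indent=2))
```

To serve this locally, save the printed JSON as `tileset.json` next to the content file.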

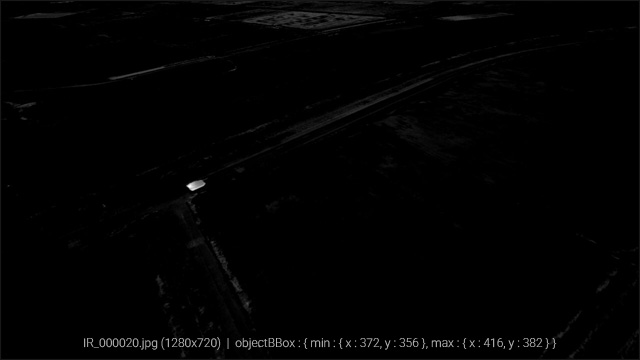

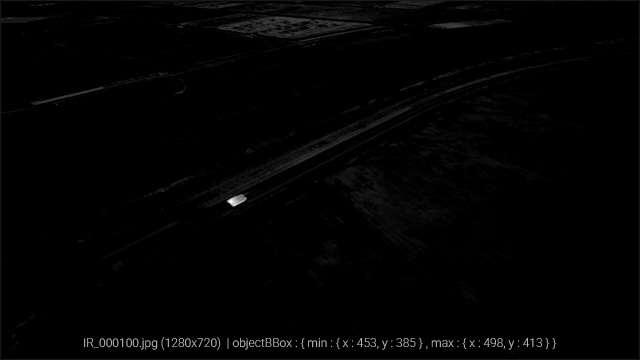

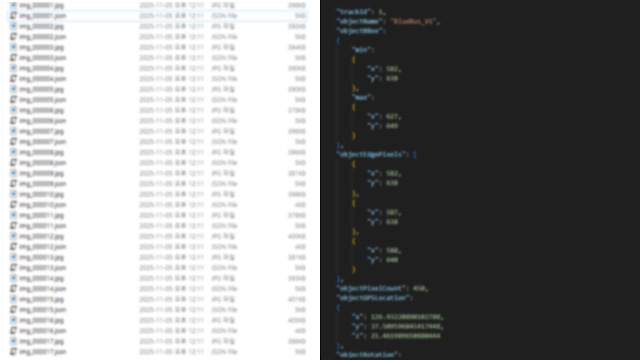

- Automatically generates the essential AI training labels (LLA, BBOX, PixelCount and Segmentation) at high speed (10 FPS) with pixel-accurate precision.
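The renderer knows which object owns each pixel, so BBOX and PixelCount labels can be derived directly from an instance segmentation mask instead of being annotated by hand. Below is a minimal sketch. It assumes a mask where each pixel stores a hypothetical integer object ID, with 0 as background. The engine-side implementation is not described in this document.

```python
def labels_from_mask(mask, background=0):
    """Derive per-object BBOX and PixelCount from an instance-ID mask.

    mask: 2D list of ints, one object ID per pixel.
    Returns {object_id: {"bbox": [x_min, y_min, x_max, y_max], "pixel_count": n}}
    using inclusive pixel coordinates.
    """
    stats = {}
    for y, row in enumerate(mask):
        for x, obj_id in enumerate(row):
            if obj_id == background:
                continue
            s = stats.get(obj_id)
            if s is None:
                # First pixel of this object: box starts as a single pixel.
                stats[obj_id] = {"bbox": [x, y, x, y], "pixel_count": 1}
            else:
                b = s["bbox"]
                b[0], b[1] = min(b[0], x), min(b[1], y)
                b[2], b[3] = max(b[2], x), max(b[3], y)
                s["pixel_count"] += 1
    return stats
```

In the example mask `[[0,0,0,0],[0,1,1,0],[0,1,1,2],[0,0,0,2]]`, object 1 gets the box `[1, 1, 2, 2]` with 4 pixels, and object 2 gets `[3, 2, 3, 3]` with 2 pixels.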

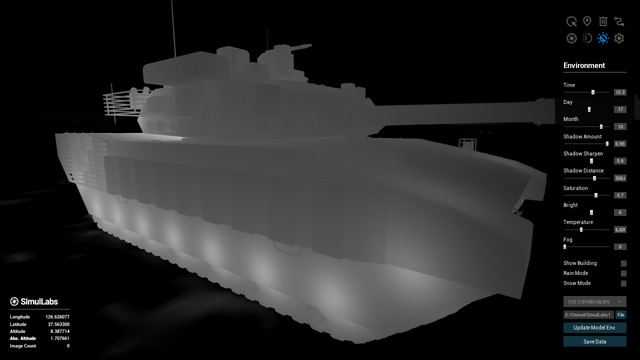

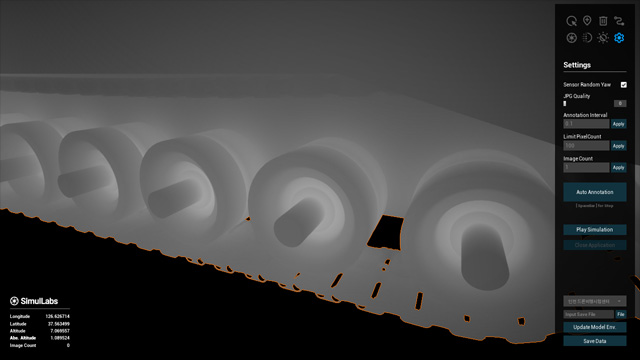

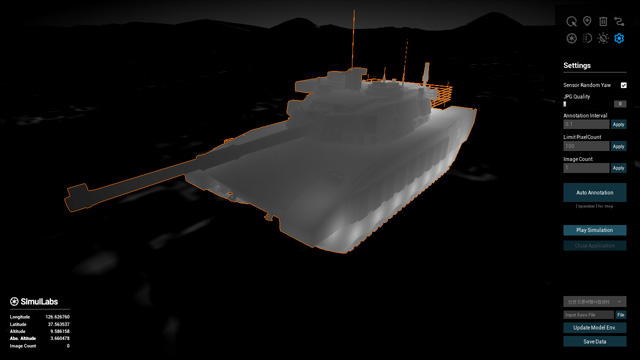

- Supports IR-style thermal rendering, pixelation, distortion, and various sensor effects to simulate realistic conditions for military, drone, and surveillance AI applications.

- The hardest problem in multi-sensor AI is not just data, but data alignment.

- We solve this with technology that delivers 'Perfect Fusion Data', bridging the gap between visible and thermal realities.
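Pixelation is one of the sensor effects listed above. It can be approximated by replacing each block of pixels with its mean value, which mimics a lower-resolution sensor. Below is a minimal sketch that works on a single-channel image (for example, one IR channel) stored as nested lists. The function name and the block-averaging approach are assumptions, not the product's implementation.

```python
def pixelate(image, block):
    """Average each block x block tile to simulate a coarser sensor.

    image: 2D list of numbers. Edge tiles smaller than `block` are
    averaged over the pixels they actually contain. Returns a new image.
    """
    if block < 1:
        raise ValueError("block must be >= 1")
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]  # leave the input untouched
    for by in range(0, h, block):
        for bx in range(0, w, block):
            ys = range(by, min(by + block, h))
            xs = range(bx, min(bx + block, w))
            mean = sum(image[y][x] for y in ys for x in xs) / (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```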

- Cesium 3D Tiles, terrain, and building assets created in-house for accurate geospatial representation.

- Supports offline operation in secure/closed network environments, independent of external servers.

- Generates infrared imagery with precise control over thermal locations, emissivity, and intensity directly via C++.

- Allows per-object and per-part thermal customization, e.g., controlling heat emission from tank wheels separately from the engine.

- Crucially, the system provides 100% accurate, non-degraded Ground Truth (Segmentation/BBOX) irrespective of severe IR artifacts like bloom or sensor noise.

- Enables accurate simulation of thermal signatures for AI model training, sensor testing, and realistic scenario evaluation.
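The thermal features above involve two separable steps:

1. Render IR intensity from per-part temperature and emissivity.
2. Degrade only the rendered image.

The document states that this is done in C++ inside the engine. The Python sketch below only illustrates the idea. It uses a hypothetical per-part table (`PART_THERMAL`) and a simple grey-body model (exitance = emissivity x sigma x T^4). This model is an assumption, not necessarily the engine's shading model. Labels are read from `part_mask`, and the noise step never touches it, so the ground truth stays exact regardless of how heavy the degradation is.

```python
import random

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

# Hypothetical per-part thermal parameters: (temperature in K, emissivity).
PART_THERMAL = {
    0: (290.0, 0.90),  # background terrain
    1: (360.0, 0.95),  # tank engine deck
    2: (320.0, 0.90),  # tank wheels, tuned separately from the engine
}

def render_ir(part_mask, params=PART_THERMAL):
    """Map each pixel's part ID to grey-body exitance eps * sigma * T^4,
    then normalize to 0..255 so hotter, more emissive parts look brighter."""
    exitance = {pid: eps * SIGMA * t ** 4 for pid, (t, eps) in params.items()}
    lo, hi = min(exitance.values()), max(exitance.values())
    scale = 255.0 / (hi - lo) if hi > lo else 0.0
    return [[(exitance[pid] - lo) * scale for pid in row] for row in part_mask]

def add_sensor_noise(image, sigma=8.0, seed=0):
    """Add Gaussian noise to the rendered image only, clamped to 0..255."""
    rng = random.Random(seed)
    return [[min(255.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in row]
            for row in image]

part_mask = [[0, 1], [2, 0]]  # ground-truth part IDs per pixel
noisy_ir = add_sensor_noise(render_ir(part_mask), sigma=20.0)
# part_mask is unchanged, so segmentation/BBOX labels remain exact.
```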

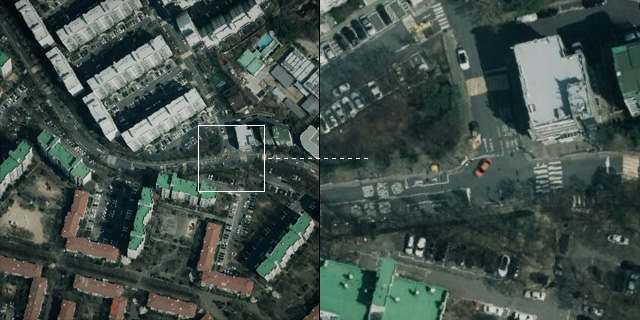

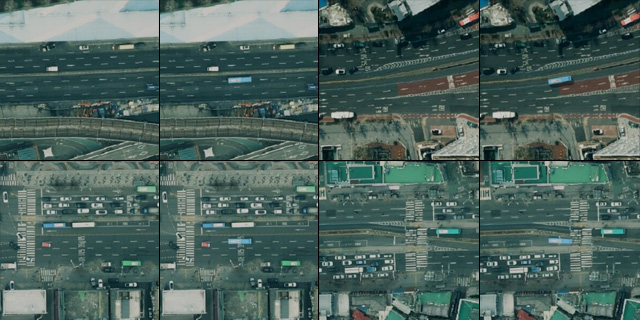

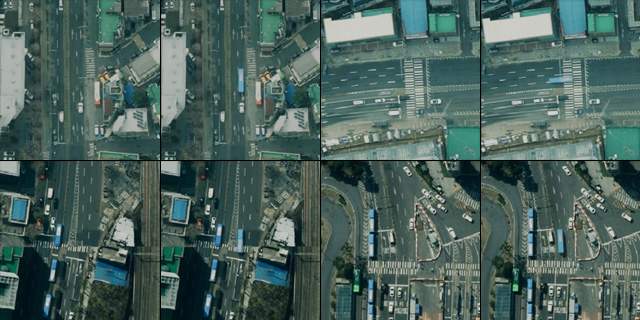

- 3D objects are adaptively degraded to match the orthophoto resolution, ensuring high photorealism when projected onto the environment.

- Automatically generates 2D projections with BBOX, Segmentation, PixelCount and LLA labels, exported as JPG and JSON at 10 FPS.

- Supports camera and object waypoint trajectories to create diverse movement scenarios for dynamic scenes.

- Applies various sensor and environmental effects including pixelation, saturation, distortion, shadows, brightness, temperature, rain, snow, lens dirt, FOV, and vignette.

- Ensures accurate segmentation and labeling of objects even under these complex visual effects, enabling robust synthetic dataset generation for AI training.

- Designed for applications in autonomous driving, robotics, and digital twin simulations, with flexible scenario customization.
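Camera and object waypoint trajectories can both be turned into per-frame positions by moving at a constant speed along the path and sampling at the output frame rate. Below is a minimal sketch that uses piecewise-linear interpolation at 10 FPS. The local metric coordinate frame, the constant-speed assumption, and the function name are all hypothetical. The engine may use splines or per-segment timing instead.

```python
import math

def sample_trajectory(waypoints, speed_mps, fps=10.0):
    """Sample positions along a piecewise-linear waypoint path at a fixed frame rate.

    waypoints: at least two (x, y, z) points in meters (hypothetical local frame).
    Returns one (x, y, z) tuple per frame; a final partial step is dropped.
    """
    if len(waypoints) < 2 or speed_mps <= 0 or fps <= 0:
        raise ValueError("need >= 2 waypoints and positive speed/fps")
    seg = [math.dist(a, b) for a, b in zip(waypoints, waypoints[1:])]
    total = sum(seg)
    step = speed_mps / fps  # meters travelled between consecutive frames
    n_frames = int(math.floor(total / step + 1e-9)) + 1
    frames = []
    i, start = 0, 0.0  # current segment index and path distance at its start
    for k in range(n_frames):
        d = k * step  # computed directly (not accumulated) to avoid float drift
        # Advance to the segment that contains path distance d.
        while i < len(seg) - 1 and d > start + seg[i]:
            start += seg[i]
            i += 1
        t = min((d - start) / seg[i], 1.0) if seg[i] > 0 else 0.0
        a, b = waypoints[i], waypoints[i + 1]
        frames.append(tuple(pa + (pb - pa) * t for pa, pb in zip(a, b)))
    return frames
```

For example, an L-shaped 20 m path driven at 20 m/s and sampled at 10 FPS yields 11 frames spaced 2 m apart. The sixth frame lands exactly on the corner waypoint.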

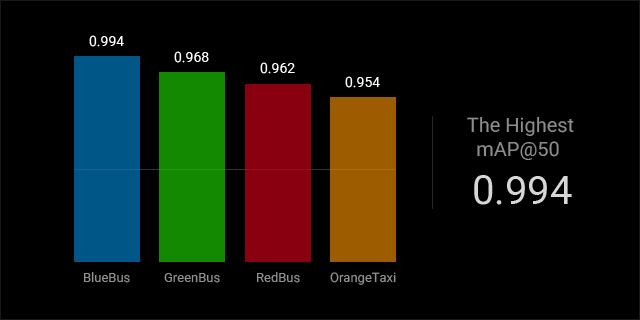

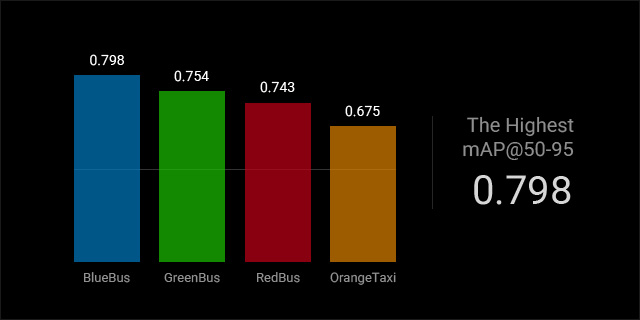

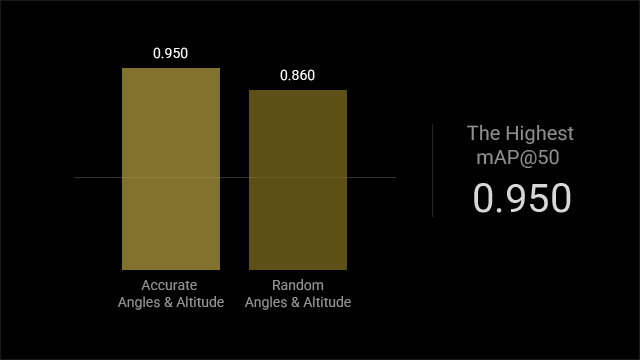

Our solution achieves high detection performance with models trained only on synthetic data and validated on real data, covering both orthographic (top-down) and drone-view scenarios:

| Target | mAP@50 | mAP@50-95 | Training samples (synthetic, labeled) | Validation samples (real, labeled) | Object size |
|---|---|---|---|---|---|
| Blue Bus | 0.994 | 0.798 | 4,000 (1280x1280) | 1,000 | 440 px |
| Green Bus | 0.968 | 0.754 | 4,000 (1280x1280) | 1,000 | 440 px |
| Red Bus | 0.962 | 0.743 | 4,000 (1280x1280) | 1,000 | 460 px |
| Orange Taxi | 0.954 | 0.675 | 4,000 (1280x1280) | 1,000 | 150 px |
| Tank (accurate angles & altitude) | 0.950 | 0.463 | 500 | 18* | n/a |
| Tank (random angles & altitude) | 0.860 | 0.532 | 1,000 | 18* | n/a |

\* Limited due to confidentiality constraints.
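The two metrics in the table follow the usual object-detection convention. mAP@50 is the average precision (AP) at an IoU threshold of 0.5. mAP@50-95 averages AP over the IoU thresholds 0.50, 0.55, ..., 0.95 (COCO style), so it also rewards tight box localization. Each row covers a single class, so its mAP equals that class's AP.

Below is a minimal single-class sketch that uses greedy matching and all-point interpolation. The document does not state which evaluation tool produced the reported numbers. Evaluators such as COCO's use 101-point interpolation, so their values can differ slightly from this sketch.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(preds, gts, thr):
    """AP for one class at one IoU threshold (all-point interpolation).

    preds: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(v) for v in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp, precisions, recalls = 0, [], []
    for rank, (img, _score, box) in enumerate(sorted(preds, key=lambda p: -p[1]), start=1):
        # Greedily match to the unmatched ground-truth box with the highest IoU.
        best, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            if not matched[img][j]:
                o = iou(box, g)
                if o > best:
                    best, best_j = o, j
        if best_j >= 0 and best >= thr:
            matched[img][best_j] = True
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap

def map_50_95(preds, gts):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.50 + 0.05 * i for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)
```

For a box with IoU 0.68 to its ground truth, AP is 1 at the four thresholds 0.50 to 0.65 and 0 at the six thresholds above them. The result is mAP@50 = 1.0 but mAP@50-95 = 0.4.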

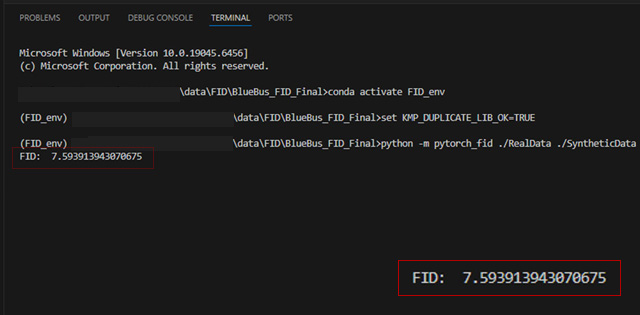

We compared 3,000 real and 3,000 synthetic object-centered patches (299x299) extracted from 1280x1280 orthographic images.

The resulting FID score of 7.59 (lower is better) indicates strong similarity between our synthetic data and real-world imagery.
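For reference, the standard FID definition fits a Gaussian to the 2048-dimensional Inception-v3 pool features of each image set. The real patches give mean $\mu_r$ and covariance $\Sigma_r$, and the synthetic patches give $\mu_s$ and $\Sigma_s$. The 299x299 patch size matches Inception-v3's native input resolution. The document does not say which FID implementation produced 7.59, so this is the textbook formula rather than a description of that pipeline:

$$
\mathrm{FID} = \lVert \mu_r - \mu_s \rVert_2^2 + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_s - 2\left(\Sigma_r \Sigma_s\right)^{1/2}\right)
$$

A score of 0 means the two feature distributions have identical means and covariances. Lower values indicate closer distributions.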

We recently completed a Proof-of-Concept (PoC) project with a major domestic defense industry organization, delivering a high-quality synthetic dataset of 50,000 fully annotated images.

- 50,000 synthetic images generated for object detection and aerial-view perception

- Custom geospatial environment reconstruction based on client requirements

- Full annotation set included (bounding boxes, segmentation, metadata, LLA coordinates)

- Delivered in a format directly compatible with the client's AI training pipeline

- Achieved within a short PoC timeline thanks to our fully offline, high-speed generation engine
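The client's training format is not specified. As one illustration of this kind of adaptation, the sketch below converts pixel-space boxes to the widely used YOLO text format. Each line has the form `class x_center y_center width height`, with all values normalized to [0, 1]. The choice of YOLO and the helper names are assumptions.

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to one YOLO label line."""
    # Clip to the image so partially visible objects stay within [0, 1].
    x1 = min(max(box[0], 0), img_w)
    y1 = min(max(box[1], 0), img_h)
    x2 = min(max(box[2], 0), img_w)
    y2 = min(max(box[3], 0), img_h)
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box lies outside the image or has zero area")
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"

def to_yolo_text(objects, img_w, img_h):
    """objects: iterable of (class_id, box); returns the full label-file text."""
    return "".join(to_yolo_line(c, b, img_w, img_h) + "\n" for c, b in objects)
```

For example, `to_yolo_line(0, (320, 320, 960, 960), 1280, 1280)` gives `"0 0.500000 0.500000 0.500000 0.500000"`.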

This PoC demonstrated that our synthetic data pipeline can rapidly produce large-scale, defense-grade datasets while maintaining high visual fidelity and precise annotation quality.

Notably, models trained solely on this synthetic dataset still achieved high real-world performance, including:

- mAP@50 up to 0.99 and mAP@50-95 up to 0.79 on real-image validation

- FID score: 7.59, measured by comparing real orthographic imagery with synthetic counterparts

These results confirm that our synthetic dataset alone is sufficient to achieve strong detection and perception performance, even in mission-critical environments.

The successful delivery validated the system's applicability across military and security domains, including UAV perception, vehicle detection, surveillance automation, and advanced sensor simulation.